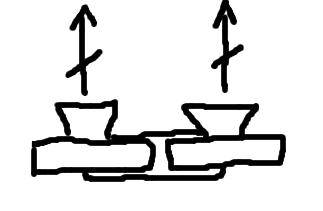

I'm a little confused about what kind of camera setup creates the best anaglyph images. All of the custom hardware setups I've read about use either two identical cameras mounted exactly side by side and pointing in the same direction,

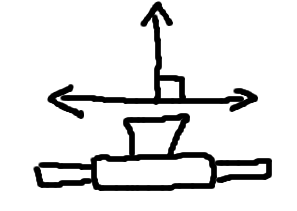

or a single camera mounted so it can slide right and left, perpendicular to the direction of the lens.

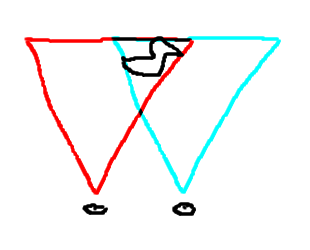

Either of these setups results in two images that reproduce the effect of looking at an object (a duck, say) with your eyes facing exactly the same direction:

(Those are eyeballs at the bottom.)

And that's the kind of configuration I've been using for the anaglyph 3D graphics display: move the virtual camera slightly to the left and render the red channel, then move the virtual camera slightly to the right and render the blue and green channels.
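Here's a rough sketch of that parallel setup in plain Python, just to pin down the idea. The function names and the image representation (lists of rows of `(r, g, b)` tuples) are my own assumptions for illustration, not any particular graphics API: red comes from the left view, green and blue from the right.

```python
def parallel_camera_positions(center, eye_separation):
    """Return (left, right) camera positions for the parallel setup.

    Both cameras keep the same viewing direction; only the x position
    changes, by half the eye separation to each side of `center`.
    """
    cx, cy, cz = center
    half = eye_separation / 2.0
    return (cx - half, cy, cz), (cx + half, cy, cz)


def compose_anaglyph(left, right):
    """Combine two same-sized RGB images into one red/cyan anaglyph.

    Each image is a list of rows, and each row is a list of (r, g, b)
    tuples. The red channel is taken from the left view; the green and
    blue channels are taken from the right view.
    """
    same_height = len(left) == len(right)
    if not same_height or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same dimensions")
    return [
        [(lp[0], rp[1], rp[2]) for lp, rp in zip(left_row, right_row)]
        for left_row, right_row in zip(left, right)
    ]
```

In a real renderer the two views would come from drawing the scene twice, once from each position; here they are just passed in as already-rendered images.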

But I think the way human vision actually works is that both eyeballs sort of independently point at whatever you're focusing on, which is why you end up crossing your eyes when a butterfly flies up and lands on your nose. More like this:

I think that's more like how I take 3D photos with my digital camera. I don't have a horizontally-sliding mount or two identical cameras, so I just take one picture pointing at an object, move over, and then take another pointing at the same object. I think the result should be more like looking at an object in real life, assuming the viewer is focusing where you expect them to be.
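For the converging version (sometimes called "toe-in"), each camera has to rotate inward so that both point at the same object. A minimal sketch of the geometry, assuming the cameras sit on the x axis half the eye separation to either side of the origin and the object is straight ahead at a known distance; the function name and the sign convention are my own choices:

```python
import math


def toe_in_yaws(eye_separation, target_distance):
    """Return (left_yaw, right_yaw) in radians for converging cameras.

    Positive yaw means turning toward +x (to the right). The left camera
    turns right and the right camera turns left by the same angle, so
    that both viewing directions pass through the target.
    """
    if target_distance <= 0:
        raise ValueError("target_distance must be positive")
    half = eye_separation / 2.0
    angle = math.atan2(half, target_distance)
    return angle, -angle
```

As the target gets farther away the angle shrinks toward zero, so for distant objects this approaches the parallel setup. Up close (the butterfly on your nose) the angle gets large.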

I'm going to try this out in my graphics setup and see how it works.